Checkpoints

Write a query to determine available seasons and games

/ 10

Create a labeled machine learning dataset

/ 10

Create a machine learning model

/ 20

Evaluate model performance and create table

/ 10

Using skillful ML model features

/ 10

Train the new model and make evaluation

/ 10

Run a query to create a table ncaa_2018_predictions

/ 10

Run queries to create tables ncaa_2019_tournament and ncaa_2019_tournament_predictions

/ 20

Bracketology with Google Machine Learning

- GSP461

- Overview

- Setup and requirements

- Task 1. Open the BigQuery console

- Task 2. NCAA March Madness

- Task 3. Find the NCAA public dataset in BigQuery

- Task 4. Write a query to determine available seasons and games

- Task 5. Understand machine learning features and labels

- Task 6. Create a labeled machine learning dataset

- Task 7. Create a machine learning model to predict the winner based on seed and team name

- Task 8. Evaluate model performance

- Task 9. Making predictions

- Task 10. How many did our model get right for the 2018 NCAA tournament?

- Task 11. Models can only take you so far...

- Task 12. Using skillful ML model features

- Task 13. Preview the new features

- Task 14. Interpreting selected metrics

- Task 15. Train the new model

- Task 16. Evaluate the new model's performance

- Task 17. Inspect what the model learned

- Task 18. Prediction time!

- Task 19. Prediction analysis:

- Task 20. Where were the upsets in March 2018?

- Task 21. Comparing model performance

- Task 22. Predicting for the 2019 March Madness tournament

- Congratulations!

GSP461

Overview

BigQuery is Google's fully managed, NoOps, low cost analytics database. With BigQuery you can query terabytes and terabytes of data without managing infrastructure or needing a database administrator. BigQuery uses SQL and takes advantage of the pay-as-you-go model. BigQuery allows you to focus on analyzing data to find meaningful insights.

BigQuery ML allows data analysts to use their SQL knowledge to build machine learning models quickly right where their data already lives in BigQuery.

In BigQuery, there is a publicly available dataset for NCAA basketball games, teams, and players. The game data covers play-by-play and box scores back to 2009, as well as final scores back to 1996. Additional data about wins and losses goes back to the 1894-5 season in some teams' cases.

In this lab, you use BigQuery ML to prototype, train, evaluate, and predict the 'winners' and 'losers' between two NCAA basketball tournament teams.

What you'll do

In this lab, you will learn how to:

- Use BigQuery to access the public NCAA dataset

- Explore the NCAA dataset to gain familiarity with the schema and scope of the data available

- Prepare and transform the existing data into features and labels

- Split the dataset into training and evaluation subsets

- Use BigQuery ML to build a model based on the NCAA tournament dataset

- Use your newly created model to predict NCAA tournament winners for your bracket

Prerequisites

This is an intermediate level lab. Before taking it, you should have some experience with SQL and the language's keywords. Familiarity with BigQuery is also recommended. If you need to get up to speed in these areas, you should at a minimum take one of the following labs before attempting this one:

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab---remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

-

Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

- The Open Google Cloud console button

- Time remaining

- The temporary credentials that you must use for this lab

- Other information, if needed, to step through this lab

-

Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account. -

If necessary, copy the Username below and paste it into the Sign in dialog.

{{{user_0.username | "Username"}}} You can also find the Username in the Lab Details panel.

-

Click Next.

-

Copy the Password below and paste it into the Welcome dialog.

{{{user_0.password | "Password"}}} You can also find the Password in the Lab Details panel.

-

Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials. Note: Using your own Google Cloud account for this lab may incur extra charges. -

Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Open the BigQuery console

- In the Google Cloud Console, select Navigation menu > BigQuery.

The Welcome to BigQuery in the Cloud Console message box opens. This message box provides a link to the quickstart guide and the release notes.

- Click Done.

The BigQuery console opens.

Task 1. Open the BigQuery console

-

From the Cloud Console, open the Navigation menu and select BigQuery.

-

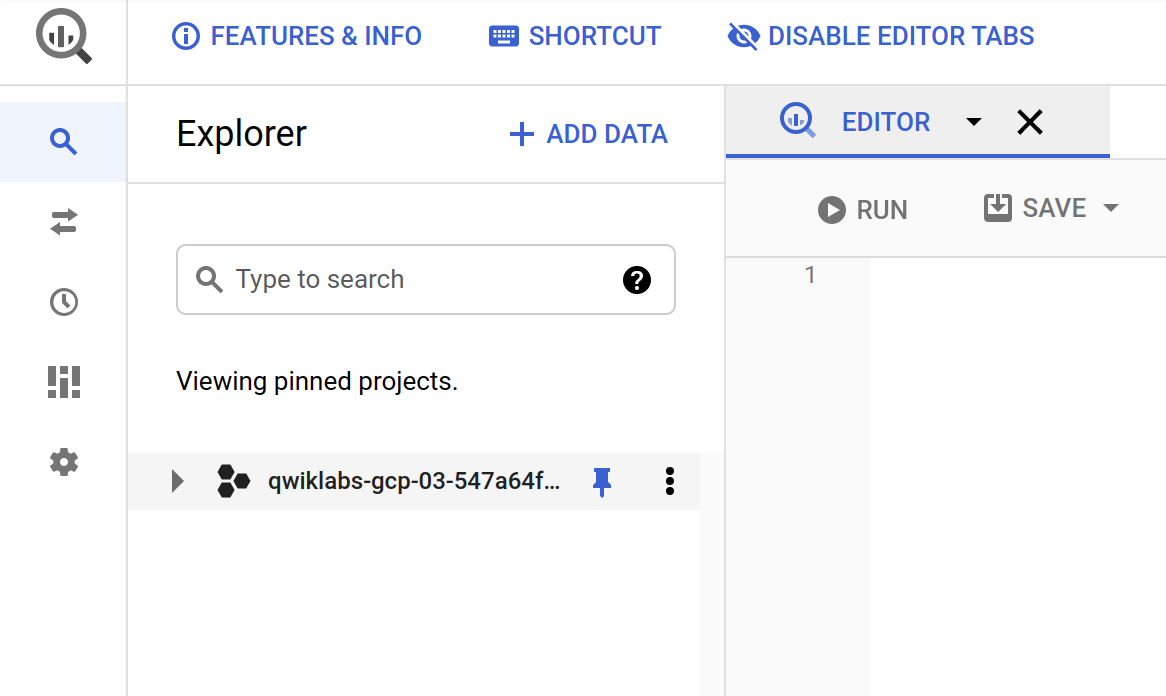

Click DONE to take yourself to the beta UI. Make sure that your Project ID is set in Explorer tab, which should resemble the following:

If you click Expand node arrow next to your project, you won't see any databases or tables there. This is because you haven't added any to your project yet.

Luckily, there are tons of open, public datasets available in BigQuery for you to work with. You will now learn more about the NCAA dataset and then figure out how to add the dataset to your BigQuery project.

Task 2. NCAA March Madness

The National Collegiate Athletic Association (NCAA) hosts two major college basketball tournaments every year in the United States for men's and women's collegiate basketball. For the NCAA Men's tournament in March, 68 teams enter single-elimination games and one team exits as the overall winner of March Madness.

The NCAA offers a public dataset that contains the statistics for men's and women's basketball games and players in for the season and the final tournaments. The game data covers play-by-play and box scores back to 2009, as well as final scores back to 1996. Additional data about wins and losses goes back to the 1894-5 season in some teams' cases.

- Be sure to check out the Google Cloud Marketing Ad campaign for predicting live insights to learn a little bit more about this dataset and what's been done with it and stay up to date with this year's tournament at Google Cloud March Madness Insights.

Task 3. Find the NCAA public dataset in BigQuery

-

Make sure that you are still in the BigQuery Console for this step. In the Explorer tab, click the + ADD button then select Public datasets.

-

In the search bar, type in NCAA Basketball and hit enter. One result will pop up—select it and then click VIEW DATASET:

This will open a new BigQuery tab with the dataset loaded. You can continue working in this tab, or close it and refresh your BigQuery Console in the other tab to reveal your public dataset.

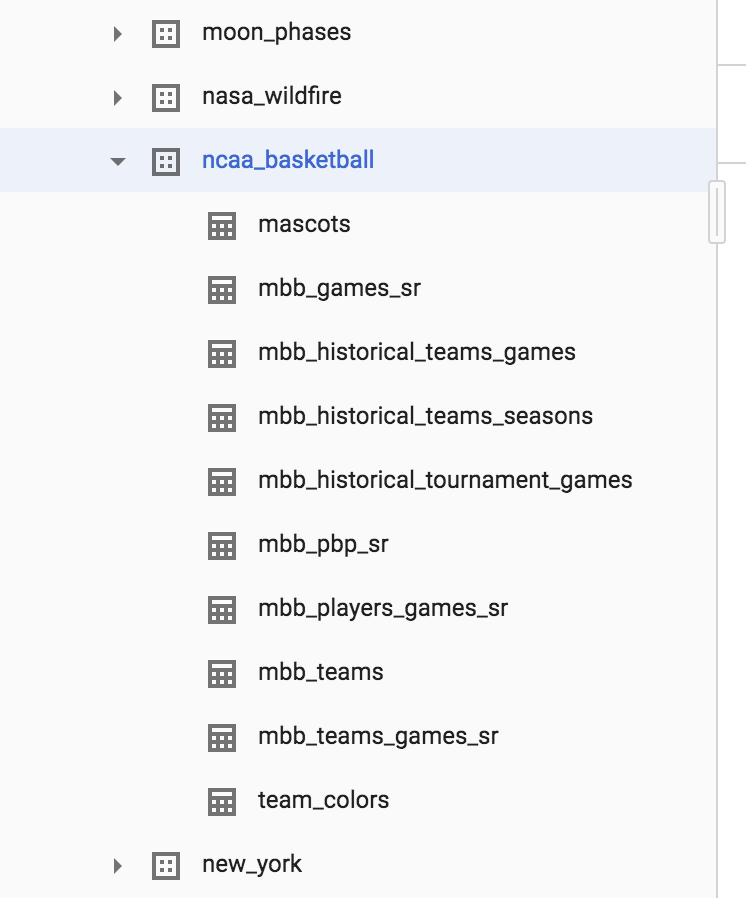

- Expand bigquery-public-data > ncaa_basketball dataset to reveal its tables:

You should see 10 tables in the dataset.

-

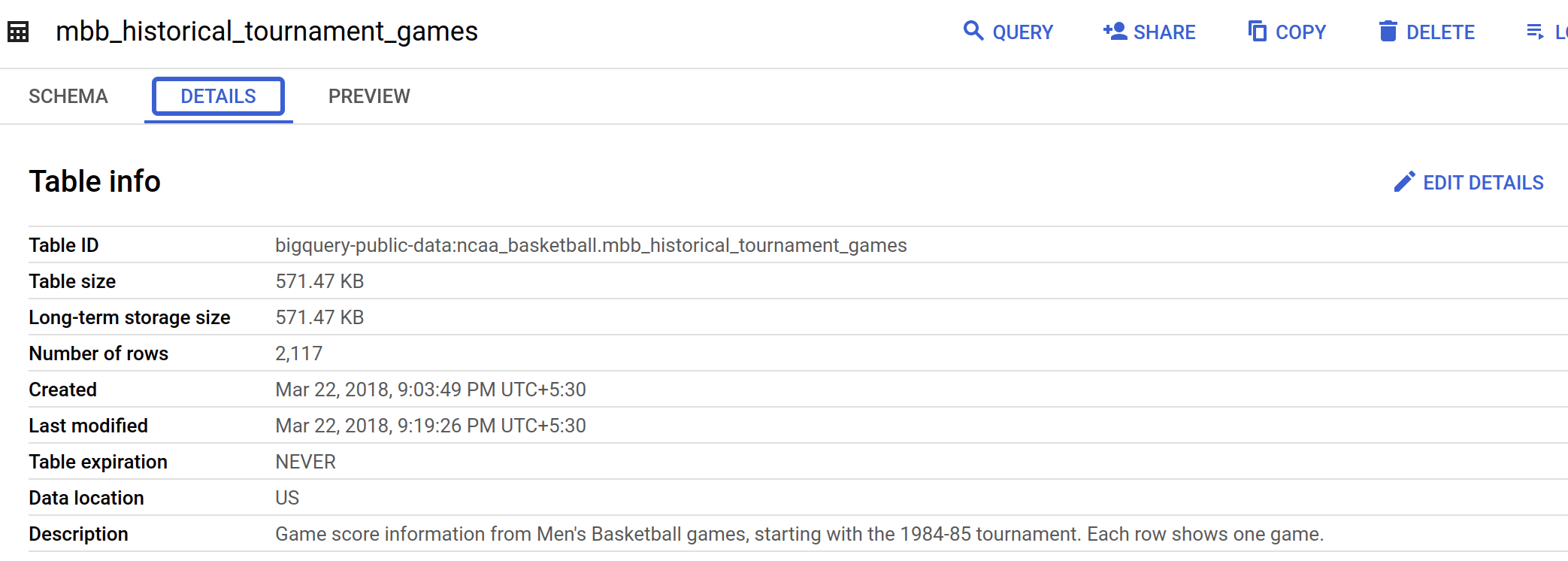

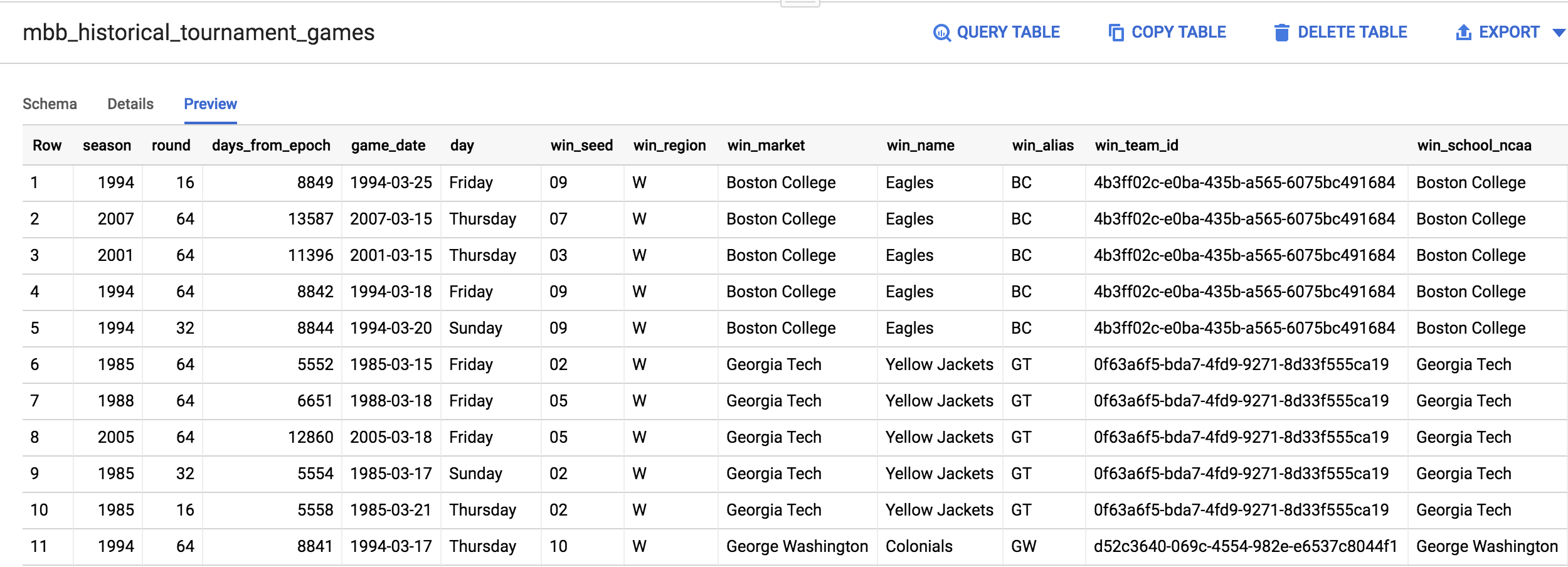

Click on the

mbb_historical_tournament_gamesand then click PREVIEW to see sample rows of data. -

Then click DETAILS to get metadata about the table.

Your page should resemble the following:

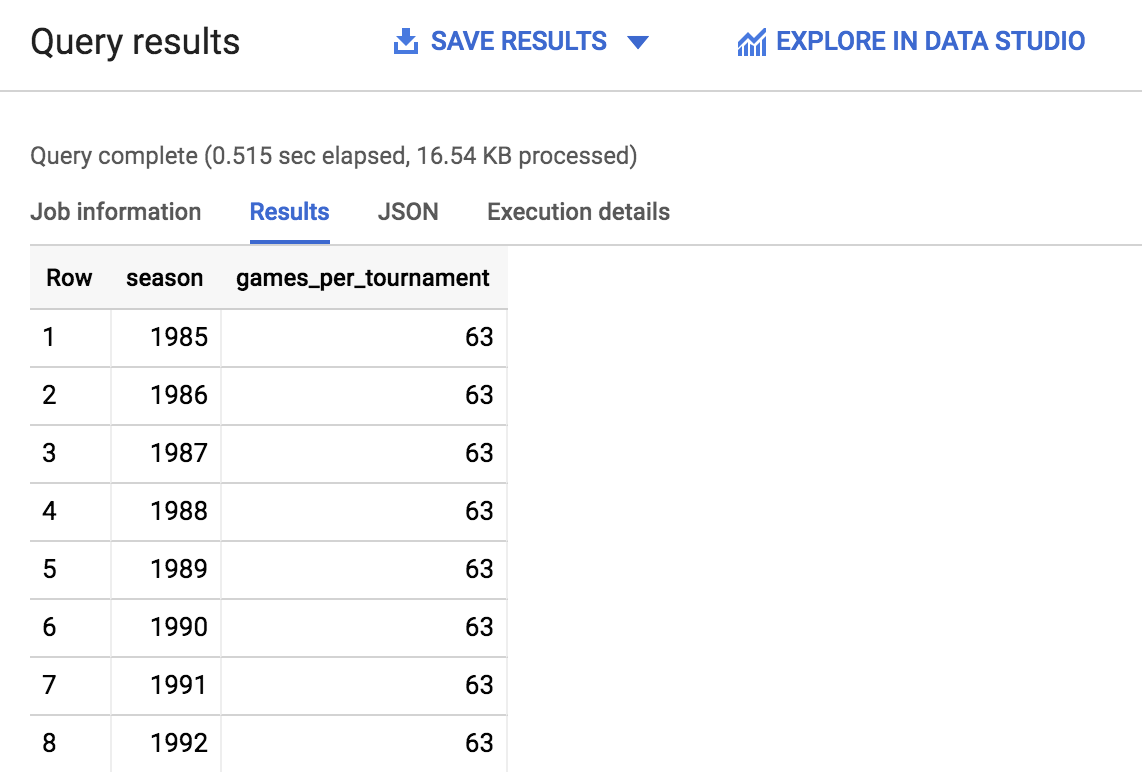

Task 4. Write a query to determine available seasons and games

You will now write a simple SQL query to determine how many seasons and games are available to explore in our mbb_historical_tournament_games table.

- In query EDITOR, which is located above the table details section, copy and paste the following into that field:

- Click RUN. Soon after, you should receive a similar output:

- Scroll through the output and take note of the amount of seasons and the games played per season—you will use that information to answer the following questions. Additionally, you can quickly see how many rows were returned by looking in the lower right near the pagination arrows.

Click Check my progress to verify the objective.

Test your understanding

The following multiple choice questions are used to reinforce your understanding of the concepts covered so far. Answer them to the best of your abilities.

Task 5. Understand machine learning features and labels

The end goal of this lab is to predict the winner of a given NCAA Men's basketball match-up using past knowledge of historical games. In machine learning, each column of data that will help us determine the outcome (win or loss for a tournament game) is called a feature.

The column of data that you are trying to predict is called the label. Machine learning models "learn" the association between features to predict the outcome of a label.

Examples of features for your historical dataset could be:

- Season

- Team name

- Opponent team name

- Team seed (ranking)

- Opponent team seed

The label you will be trying to predict for future games will be the game outcome — whether or not a team wins or loses.

Test your understanding

The following multiple choice questions are used to reinforce your understanding of the concepts covered so far. Answer them to the best of your abilities.

Task 6. Create a labeled machine learning dataset

Building a machine learning model requires a lot of high-quality training data. Fortunately, our NCAA dataset is robust enough where we can rely upon it to build an effective model.

-

Return to the BigQuery Console—you should have left off on the result of the query you ran.

-

From the left-hand menu, open the

mbb_historical_tournament_gamestable by clicking on the table name. Then once it loads, click PREVIEW. Your page should resemble the following:

Test your understanding

The following multiple choice questions are used to reinforce your understanding of the concepts covered so far. Answer them to the best of your abilities.

-

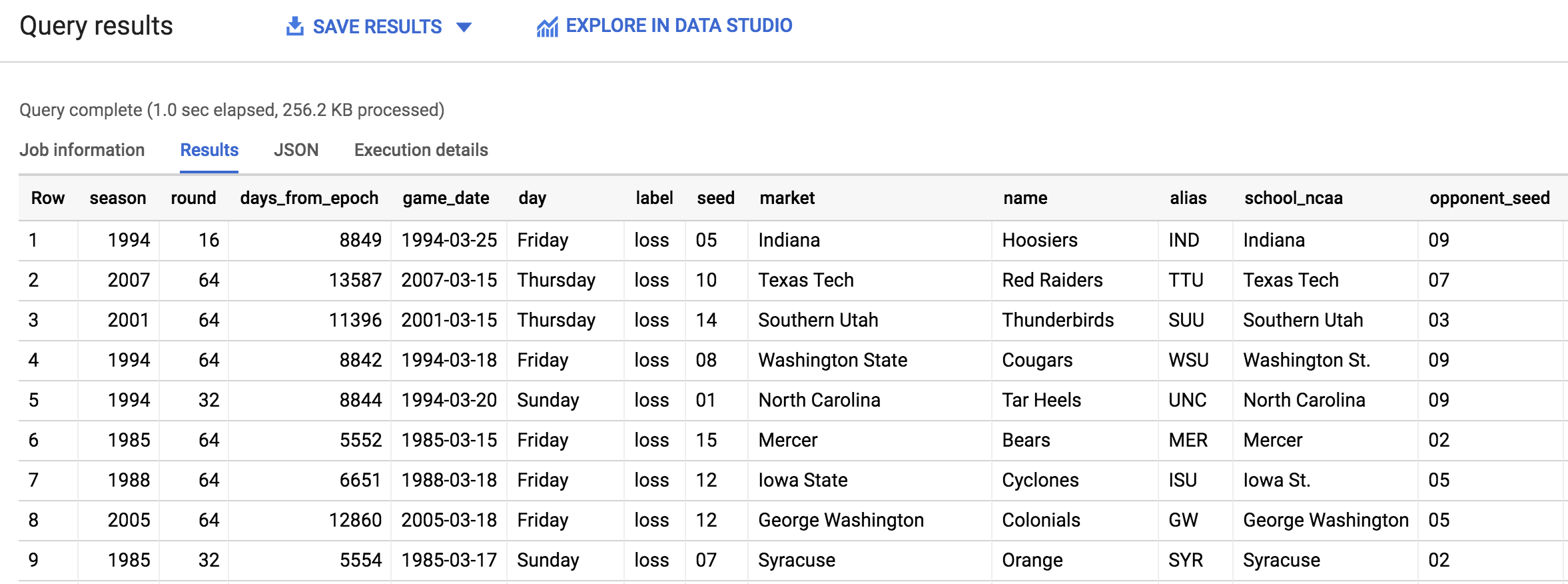

After inspecting the dataset, you'll notice that one row in the dataset has columns for both

win_marketandlose_market. You need to break the single game record into a record for each team so you can label each row as a "winner" or "loser". -

In the query EDITOR, copy and paste the following query and then click RUN:

You should receive the following output:

Click Check my progress to verify the objective.

Now that you know what features are available from the result, answer the following question to reinforce your understanding of the dataset.

Task 7. Create a machine learning model to predict the winner based on seed and team name

Now that we have explored our data, it's time to train a machine learning model.

- Using your best judgment, answer the question below to orient yourself with this section.

Choosing a model type

For this particular problem, you will be building a classification model. Since we have two classes, win or lose, it's also called a binary classification model. A team can either win or lose a match.

If you wanted to, after the lab, you could forecast the total number of points a team will score using a forecasting model but that isn't going to be our focus here.

An easy way to tell if you're forecasting or classifying is to look at the type of label (column) of data you are predicting:

- If it's a numeric column (like units sold or points in a game), you're doing forecasting

- If it's a string value you're doing classification (this row is either this in class or this other class), and

- If you have more than two classes (like win, lose, or tie) you are doing multi-class classification

Our classification model will be doing machine learning with a widely used statistical model called Logistic Regression.

We need a model that generates a probability for each possible discrete label value, which in our case is either a 'win' or a 'loss'. Logistic regression is a good model type to start with for this purpose. The good news for you is that the ML model will do all the math and optimization for you during model training -- it's what computers are really good at!

Creating a machine learning model with BigQuery ML

To create our classification model in BigQuery we simply need to write the SQL statement CREATE MODEL and provide a few options.

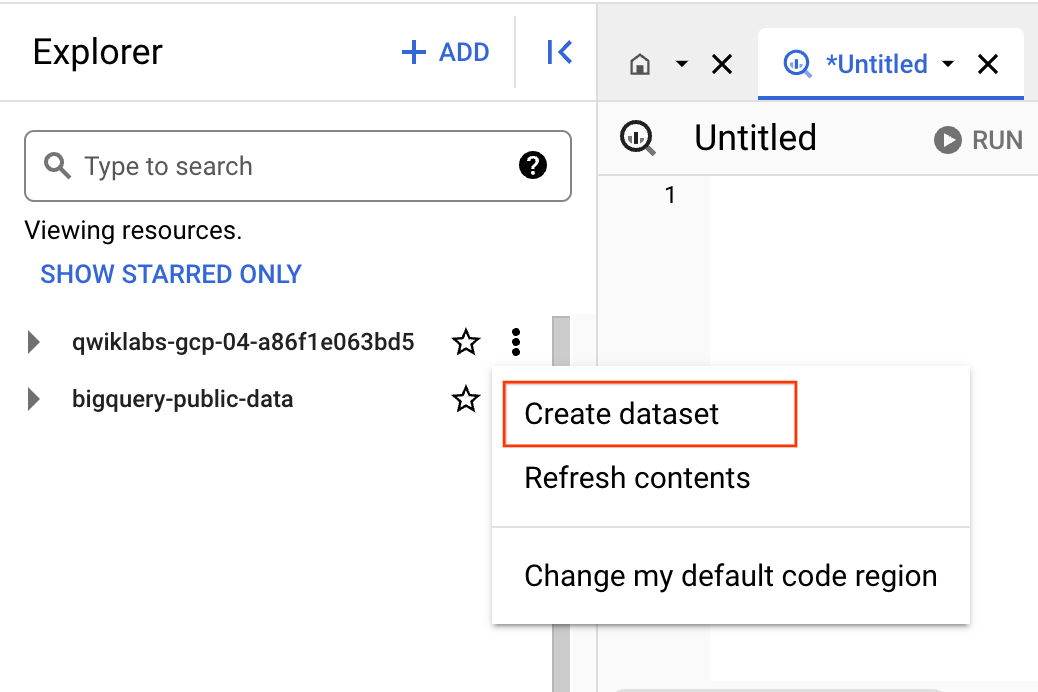

But, before we can create the model, we need a place in our project to store it first.

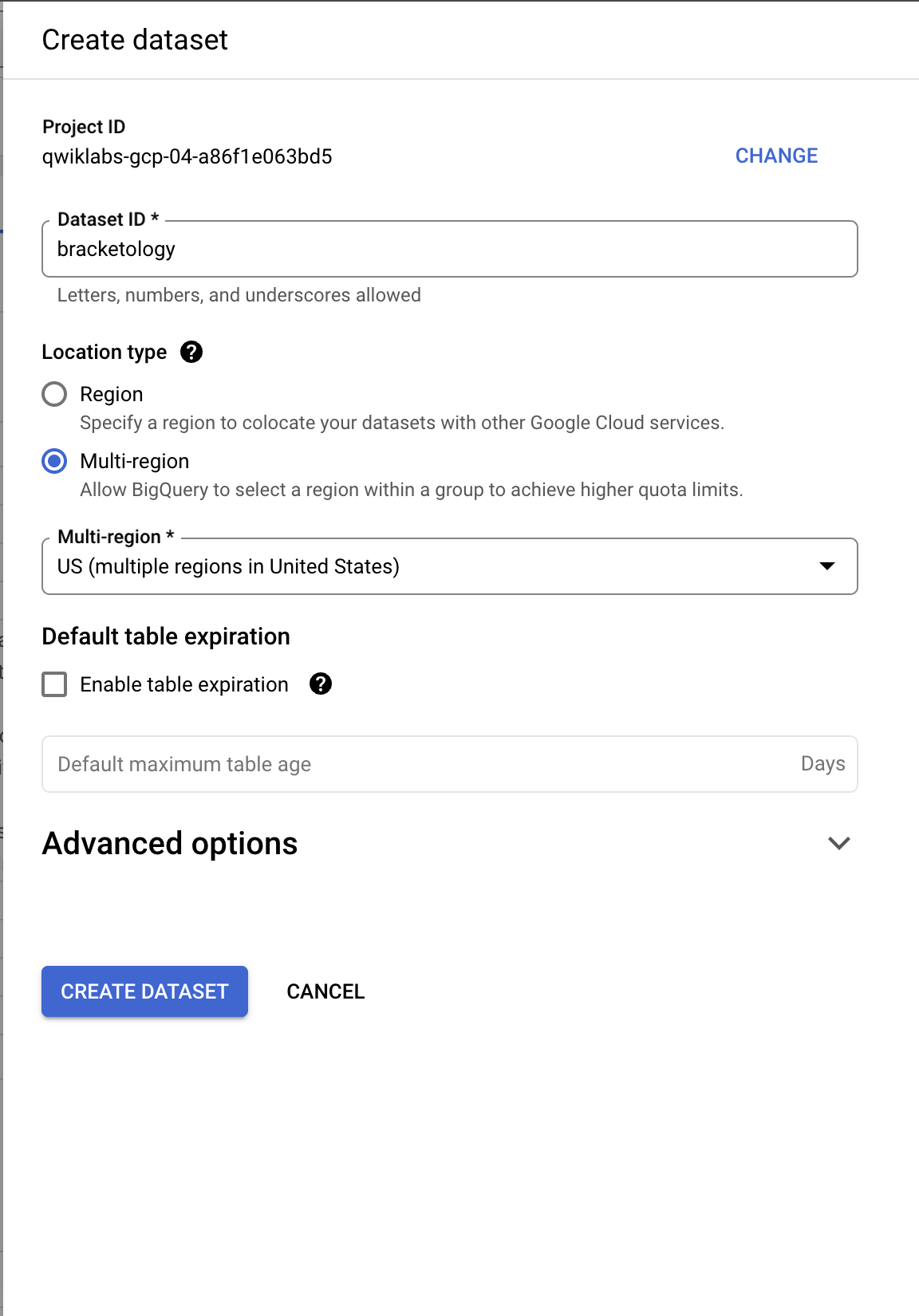

- In the Explorer tab, click on the View actions icon next to your Project ID and select Create dataset.

- This will open a Create dataset dialog. Set your Dataset ID to

bracketologyand click CREATE DATASET.

- Now run the following command in the query EDITOR

In our code you'll notice that creating the model is just a few lines of SQL. One of the most important options is choosing the model type logistic_reg for our classification task.

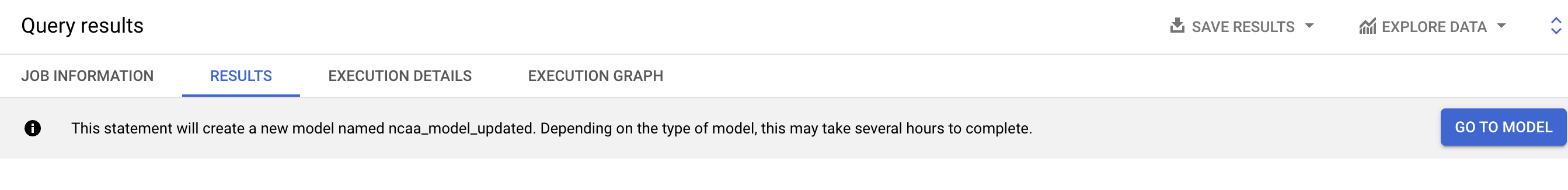

It will take between 3 and 5 minutes to train the model. You should receive the following output when the job is finished:

- Click the Go to Model button on the right-hand side of the console.

Click Check my progress to verify the objective.

View model training details

- Now that you are in the model details, scroll down to the Training options section and see the actual iterations the model performed to train.

If you're experienced with machine learning, note that you can customize all of these hyperparameters (options set before the model runs) by defining their value in the OPTIONS statement.

If you're new to machine learning, BigQuery ML will set smart default values for any options not set.

Refer to the BigQuery ML model options list to learn more.

View model training stats

Machine learning models "learn" the association between known features and unknown labels. As you might intuitively guess, some features like "ranking seed" or "school name" may help determine a win or loss more than other data columns (features) like the day of the week the game is played.

Machine learning models start the training process with no such intuition and will generally randomize the weighting of each feature.

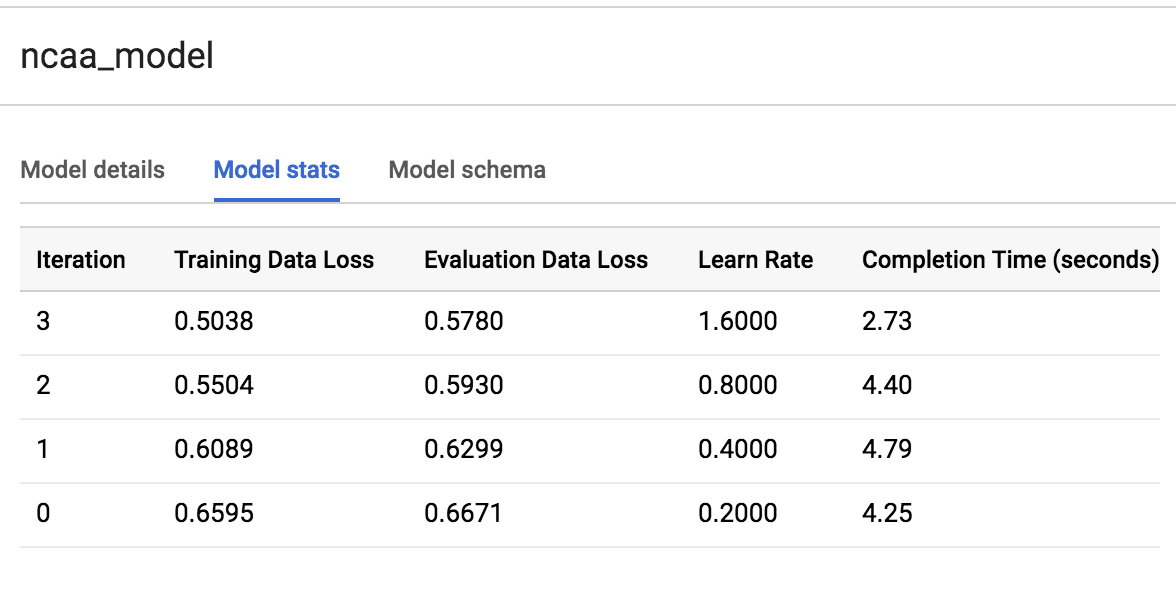

During the training process, the model will optimize a path to it's best possible weighting of each feature. With each run it is trying to minimize Training Data Loss and Evaluation Data Loss.

Should you ever find that the final loss for evaluation is much higher than for training, your model is overfitting or memorizing your training data instead of learning generalizable relationships.

You can view how many training runs the model takes by clicking the TRAINING tab and selecting Table under View as option.

During our particular run, the model completed 3 training iterations in about 20 seconds. Yours will likely vary.

See what the model learned about our features

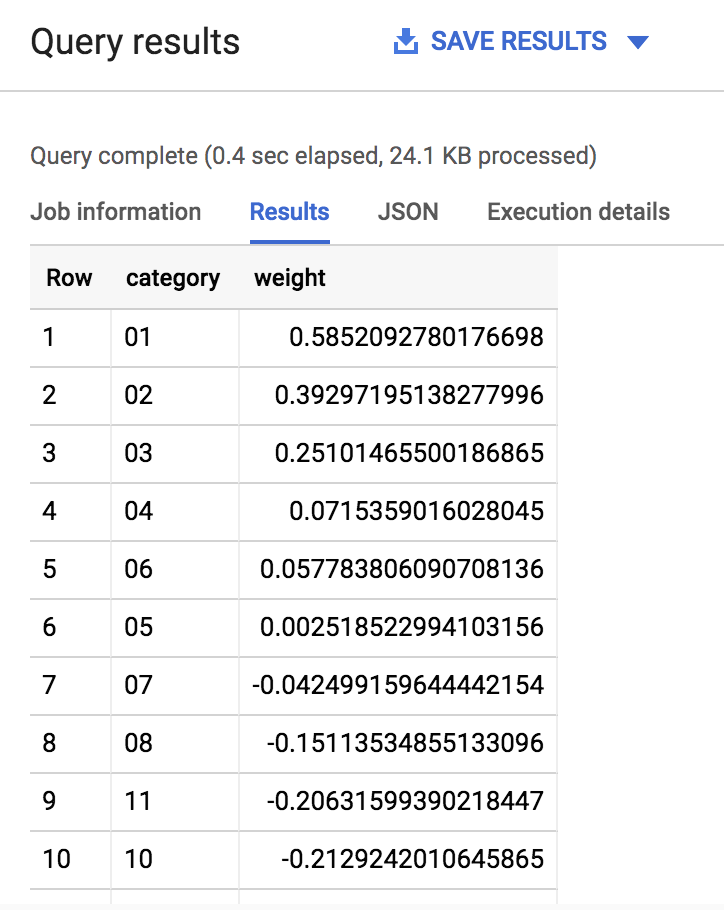

After training, you can see which features provided the most value to the model by inspecting the weights.

- Run the following command in the query EDITOR:

Your output should resemble the following:

As you can see, if the seed of a team is very low (1,2,3) or very high (14,15,16) the model gives it a significant weight (max is 1.0) in determining the win loss outcome. Intuitively this makes sense as we expect very low seed teams to perform well in the tournament.

The real magic of machine learning is that we didn't create a ton of hardcoded IF THEN statements in SQL telling the model IF the seed is 1 THEN gives the team a 80% more chance of winning. Machine learning does away with hardcoded rules and logic and learns these relationships for itself. Check out the BQML syntax weights documentation to learn more.

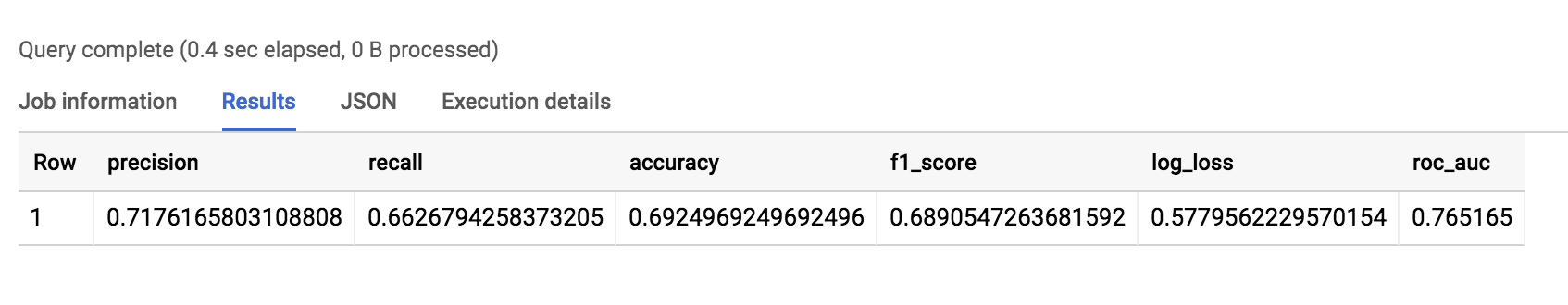

Task 8. Evaluate model performance

To evaluate the model's performance you can run a simple ML.EVALUATE against a trained model.

- Run the following command in the query EDITOR:

You should receive a similar output:

The value will be around 69% accurate. While it's better than a coin flip, there is room for improvement.

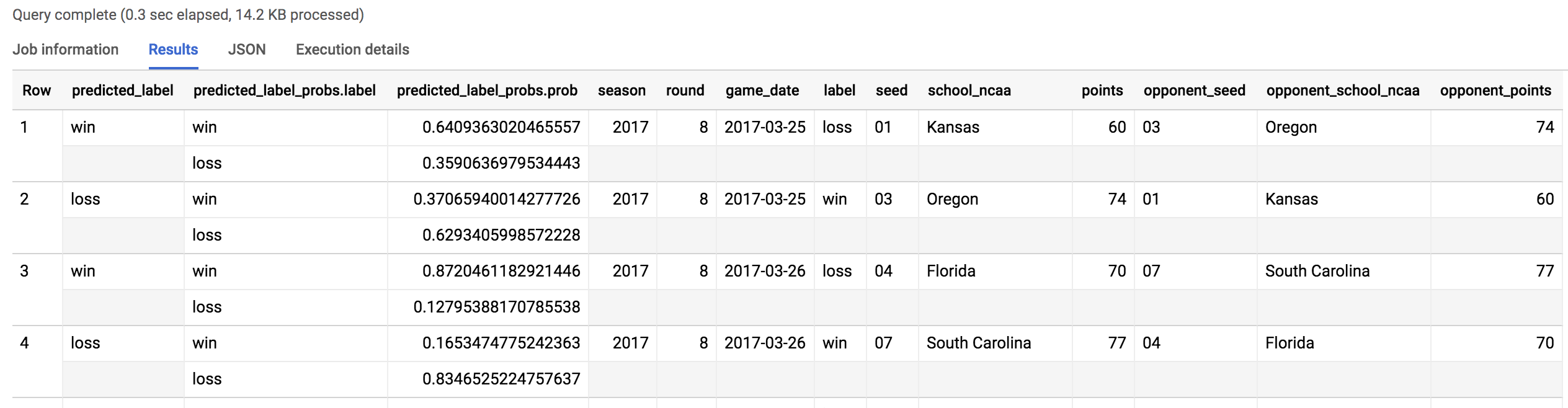

Task 9. Making predictions

Now that you trained a model on historical data up to and including the 2017 season (which was all the data you had), it's time to make predictions for the 2018 season. Your data science team has just provided you with the tournament results for the 2018 tournament in a separate table which you don't have in your original dataset.

Making predictions is as simple as calling ML.PREDICT on a trained model and passing through the dataset you want to predict on.

- Run the following command in the query EDITOR:

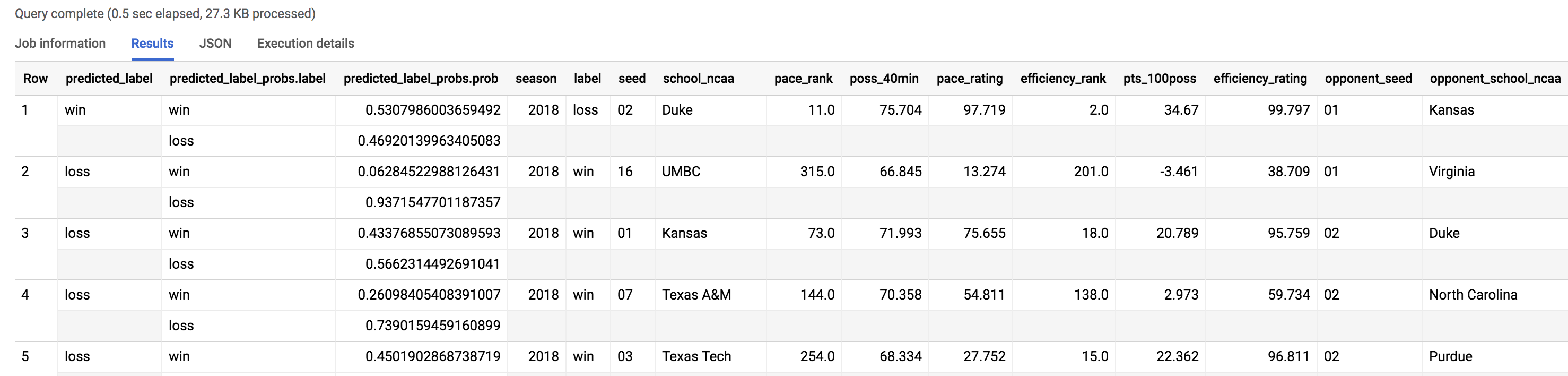

You should receive a similar output soon after:

Click Check my progress to verify the objective.

You will now see your original dataset plus the addition of three new columns:

- Predicted label

- Predicted label options

- Predicted label probability

Since you happen to know the results of the 2018 March Madness tournament, let's see how the model did with its predictions. (Tip: If you're predicting for this year's March Madness tournament, you would simply pass in a dataset with 2019 seeds and team names. Naturally, the label column will be empty as those games haven't been played yet -- that's what you're predicting!).

Task 10. How many did our model get right for the 2018 NCAA tournament?

- Run the following command in the query EDITOR:

You should receive a similar output:

Out of 134 predictions (67 March tournament games), our model got it wrong 38 times. 70% overall for the 2018 tournament matchup.

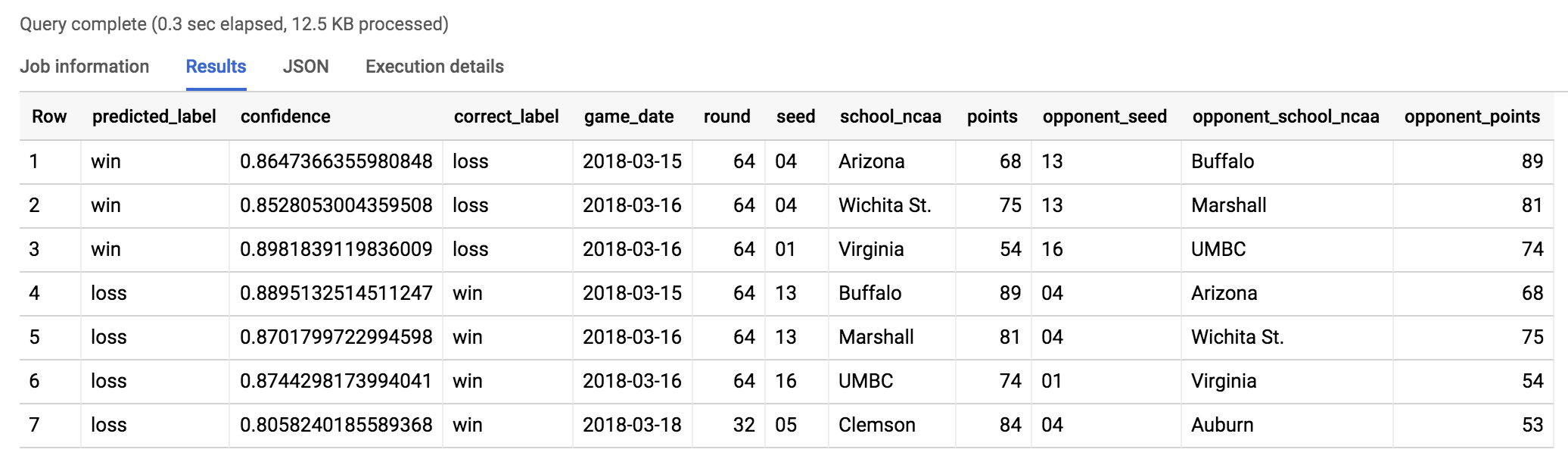

Task 11. Models can only take you so far...

There are many other factors and features that go into the close wins and amazing upsets of any March Madness tournament that a model would have a very hard time predicting.

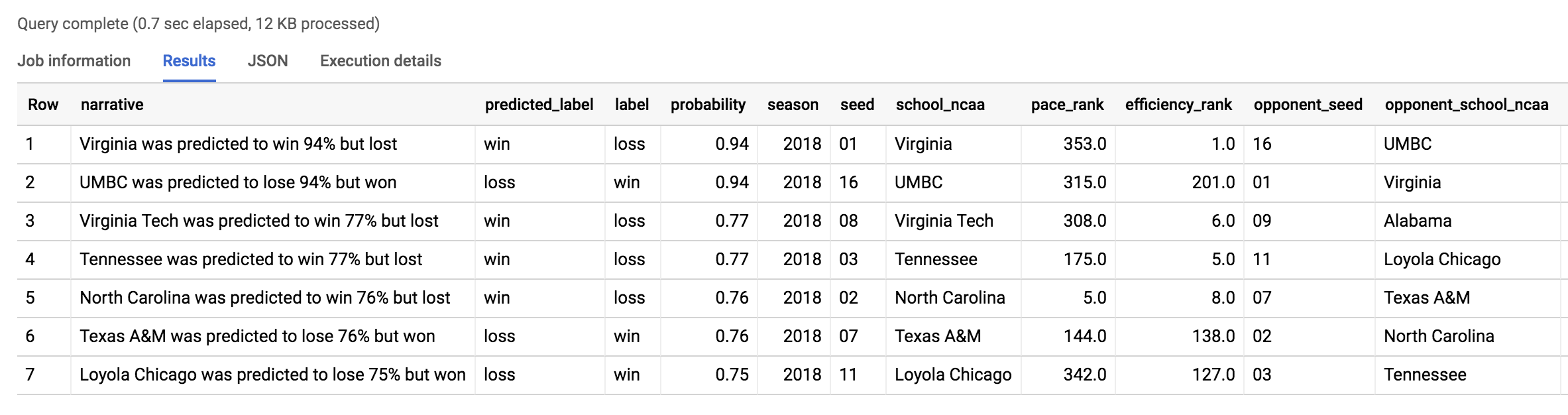

Let's find biggest upset for the 2017 tournament according to the model. We'll look where the model predicts with 80%+ confidence and gets it WRONG.

- Run the following command in the query EDITOR:

The outcome should look like this:

Coach Odom (UMBC) after the game said, "Unbelievable — it’s really all you can say." Read more about it in the 2018 UMBC vs. Virginia men's basketball game article.

Recap

- You created a machine learning model to predict game outcome.

- You evaluated the performance and got to 69% accuracy using seed and team name as primary features

- You predicted 2018 tournament outcomes

- You analyzed the results for insights

Our next challenge will be to build a better model WITHOUT using seed and team name as features.

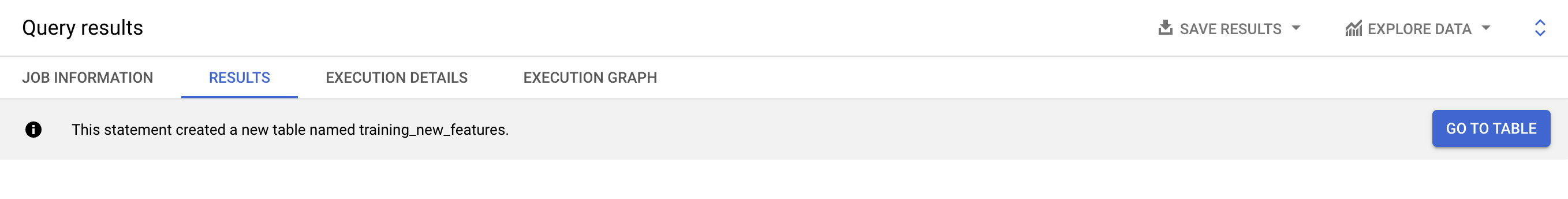

Task 12. Using skillful ML model features

In the second part of this lab you will be building a second ML model using newly provided and detailed features.

Now that you're familiar with building ML models using BigQuery ML, your data science team has provided you with a new play-by-play dataset where they have created new team metrics for your model to learn from. These include:

- Scoring efficiency over time based on historical play-by-play analysis.

- Possession of the basketball over time.

Create a new ML dataset with these skillful features

- Run the following command in the query EDITOR:

You should receive a similar output soon after:

Click Check my progress to verify the objective.

Task 13. Preview the new features

- Click on the Go to table button on the right-hand side of the console. Then click on the Preview tab.

Your table should look similar to the following:

Don't worry if your output is not identical to the above screenshot.

Task 14. Interpreting selected metrics

- You will now learn about some important labels that aid us in making predictions.

opp_efficiency_rank

Opponent's Efficiency Rank: out of all the teams, what rank does our opponent have for scoring efficiently over time (points per 100 basketball possessions). Lower is better.

opp_pace_rank

Opponent's Pace Rank: out of all teams, what rank does our opponent have for basketball possession (number of possessions in 40 minutes). Lower is better.

Now that you have insightful features on how well a team can score and how well it can hold on to the basketball lets train our second model.

As an additional measure to safe-guard your model from "memorizing good teams from the past", exclude the team's name and the seed from this next model and focus only on the metrics.

Task 15. Train the new model

- Run the following command in the query EDITOR:

Soon after, your output should look similar to the following:

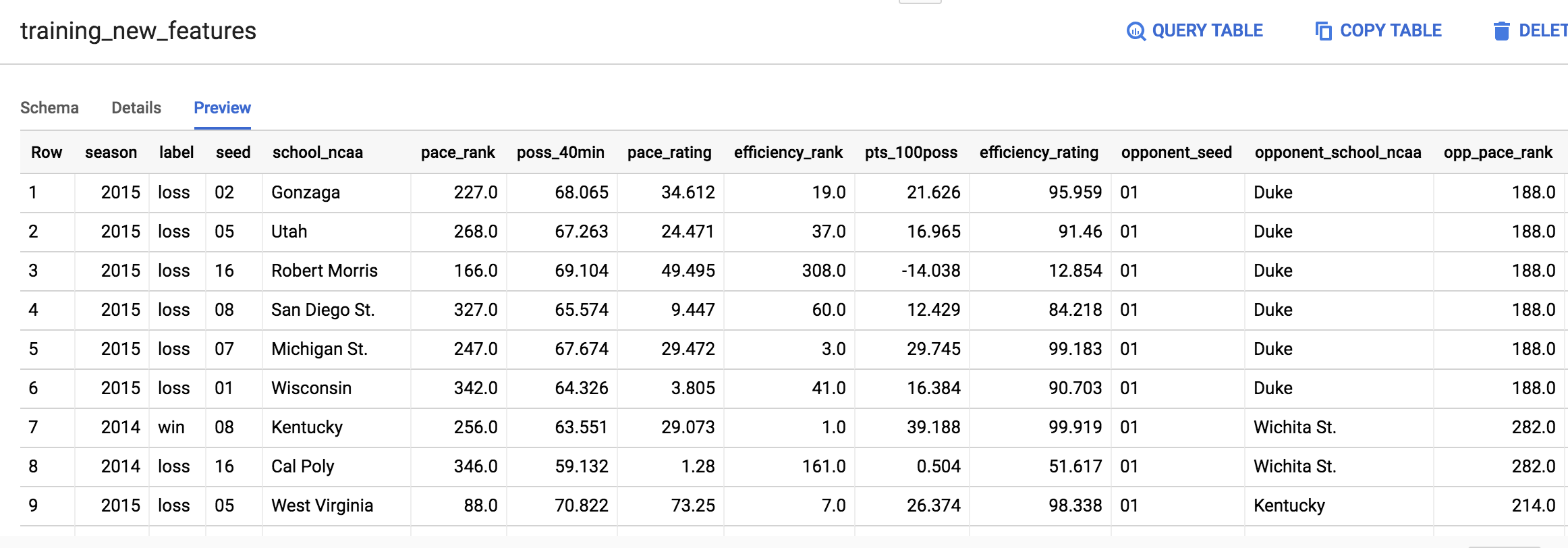

Task 16. Evaluate the new model's performance

- To evaluate your model's performance, run the following command in the query EDITOR:

Your output should look similar to the followings:

Wow! You just trained a new model with different features and increased your accuracy to around 75% or a 5% lift from the original model.

This is one of the biggest lessons learned in machine learning is that your high quality feature dataset can make a huge difference in how accurate your model is.

Click Check my progress to verify the objective.

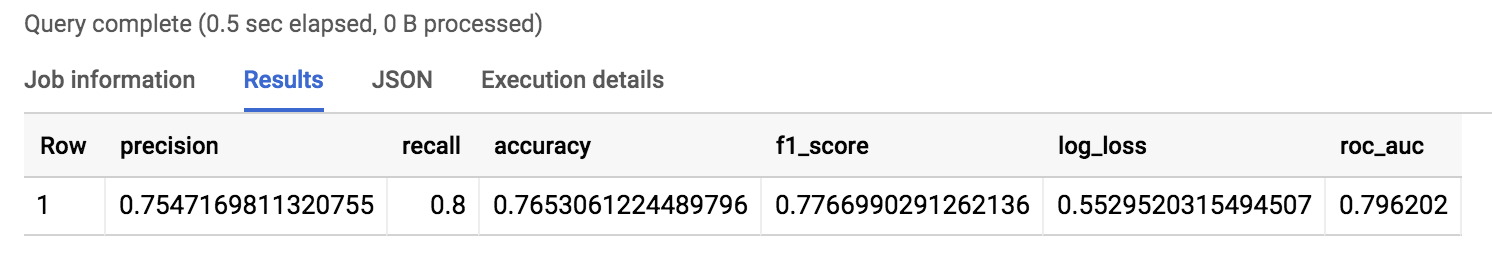

Task 17. Inspect what the model learned

- Which features does the model weigh the most in win / loss outcome? Find out by running the following command in the query EDITOR:

Your output should look like this:

We've taken the absolute value of the weights in our ordering so the most impactful (for a win or a loss) are listed first.

As you can see in the results, the top 3 are pace_stat_diff, eff_stat_diff, and eff_rating_diff. Let's explore these a little bit more.

pace_stat_diff

How different the actual stat for (possessions / 40 minutes) was between teams. According to the model, this is the largest driver in choosing the game outcome.

eff_stat_diff

How different the actual stat for (net points / 100 possessions) was between teams.

eff_rating_diff

How different the normalized rating for scoring efficiency was between teams.

What did the model not weigh heavily in its predictions? Season. It was last in the output of ordered weights above. What the model is saying is that the season (2013, 2014, 2015) isn't that useful in predicting game outcome. There wasn't anything magical about the year "2014" for all teams.

An interesting insight is that the model valued the pace of a team (how well they can control the ball) over how efficiently a team can score.

Task 18. Prediction time!

- Run the following command in the query EDITOR:

Your output should be similar to the following:

Click Check my progress to verify the objective.

Task 19. Prediction analysis:

Since you know the correct game outcome, you can see where your model made an incorrect prediction using the new testing dataset.

- Run the following command in the query EDITOR:

As you can see from the number of records returned from the query, the model got 48 matchups wrong (24 games) out of the total number of matchups in the tournament for a 2018 accuracy of 64%. 2018 must have been a wild year, let's look at what upsets happened.

Task 20. Where were the upsets in March 2018?

- Run the following command in the query EDITOR:

Your outcome should look like:

The major upset was the same one our previous model found: UMBC vs Virginia. Read more about how 2018 overall was a year of huge upsets in Has This Been the “Maddest” March? article. Will 2019 be just as wild?

Task 21. Comparing model performance

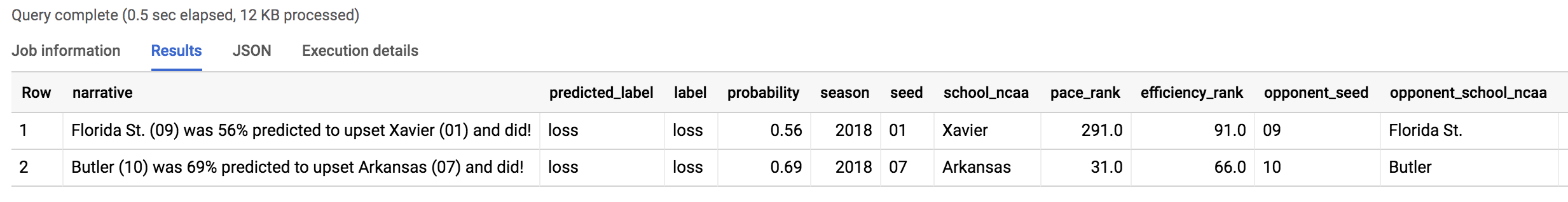

What about where the naive model (comparing seeds) got it wrong but the advanced model got it right?

- Run the following command in the query EDITOR:

Your outcome should look like:

The model predicted a Florida St. (09) upset of Xavier (01) and they did!

The upset was correctly predicted by the new model (even when the seed ranking said otherwise) based on your new skillful features like pace and shooting efficiency. Watch the game highlights on YouTube.

Task 22. Predicting for the 2019 March Madness tournament

Now that we know the teams and seed rankings for March 2019, let's predict the outcome of future games.

Explore the 2019 data

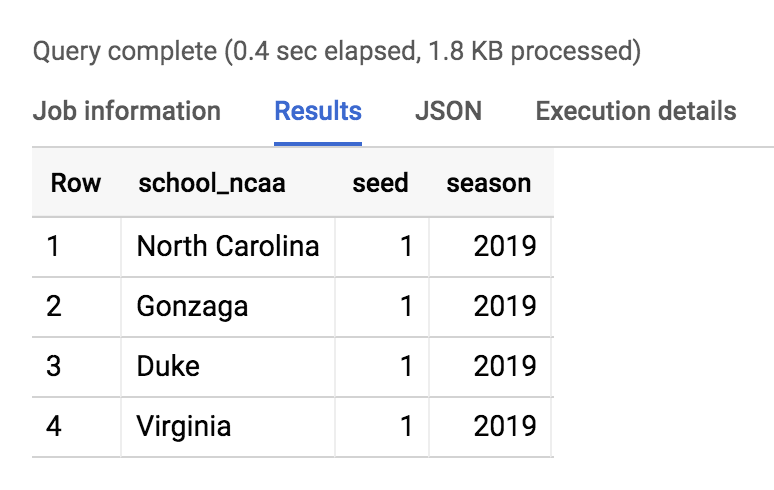

- Run the below query to find the top seeds

Your outcome should look like:

Create a matrix of all possible games

Since we don't know which teams will play each other as the tournament progresses, we'll simply have them all face each other.

In SQL, an easy way to have a single team play every other team in a table is with a CROSS JOIN.

- Run the below query to get all possible team games in the tournament.

Add in 2018 team stats (pace, efficiency)

Make predictions

Click Check my progress to verify the objective.

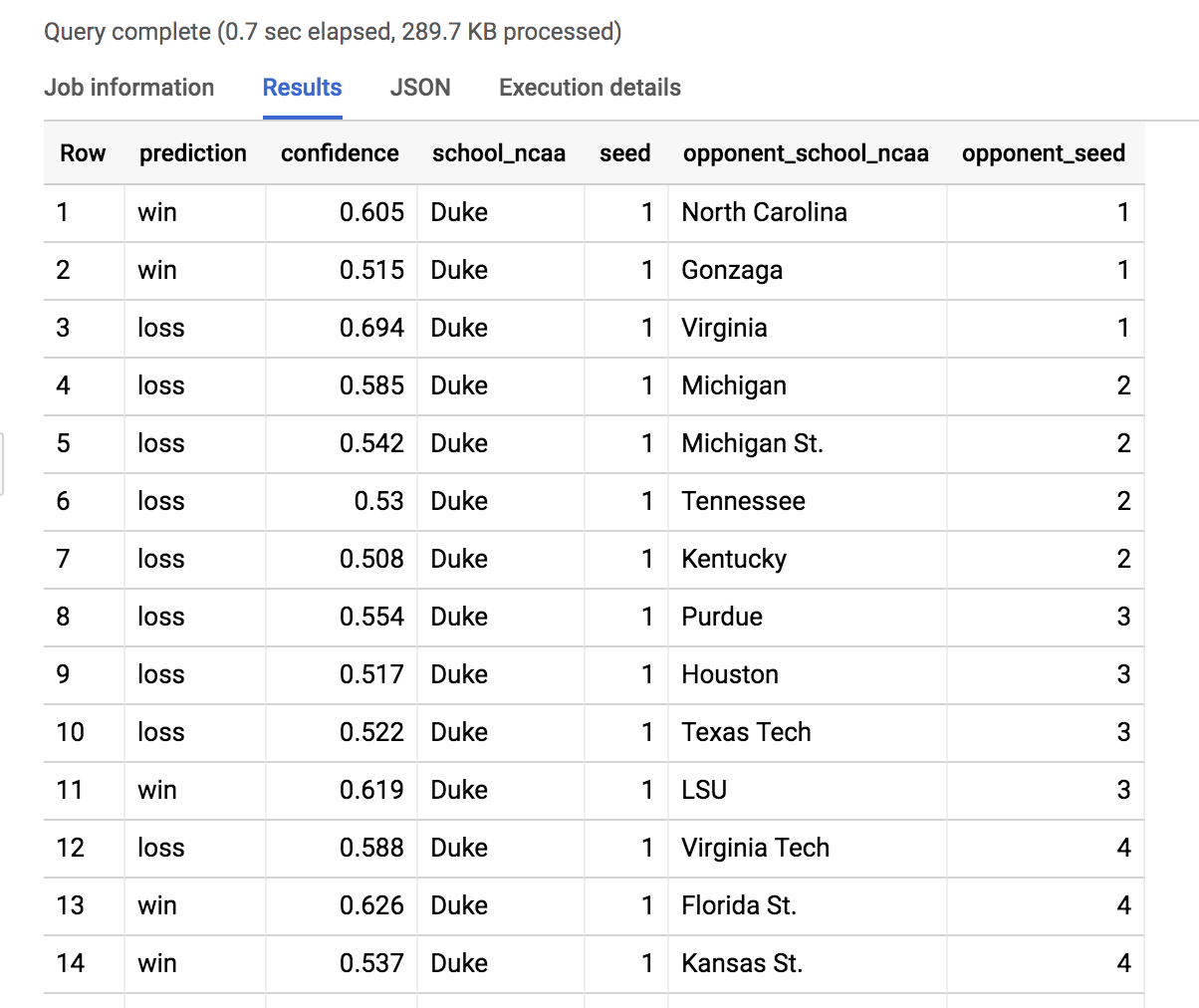

Get your predictions

- Here we filtered the model results to see all of Duke's possible games. Scroll to find the Duke vs North Dakota St. game.

Insight: Duke (1) is 88.5% favored to beat North Dakota St. (16) on 3/22/19.

Experiment by changing the school_ncaa filter above to predict for the matchups in your bracket. Write down what the model confidence is and enjoy the games!

Congratulations!

You used BigQuery ML to predict winning teams for the NCAA Men's basketball tournament.

Next steps / Learn more

- Want to learn more about basketball metrics and analysis? Check out additional analysis from team behind the Google Cloud NCAA tournament ads and predictions.

- Check out this lab:

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated March 19, 2024

Lab Last Tested March 19, 2024

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.